Subset SWOT LR L2 Unsmoothed data from AVISO’s FTP Server

This notebook explains how to select and retrieve Unsmoothed (250-m) SWOT LR L2 half orbits from AVISO’s FTP server, and how to subset the data to a geographical area.

L2 Unsmoothed data can be explored at:

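To execute this notebook, your Python environment needs the following packages: xarray with a netCDF backend such as netCDF4 (to read the `left`/`right` groups), numpy, scipy (used by `interpolate_na` to extrapolate coordinates), and matplotlib with cartopy (for visualisation). The FTP download uses Python's built-in `ftplib`.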

Tutorial Objectives

Download files through FTP, with selection by cycle and pass numbers

Subset data with geographical selection

Imports and helper functions

```python
# Install Cartopy with mamba to avoid dependency conflicts
# ! mamba install -q -c conda-forge cartopy
```

```python
import warnings
warnings.filterwarnings('ignore')
```

```python
import os
import ftplib
from getpass import getpass

import numpy as np
import xarray as xr
```

```python
import cartopy.crs as ccrs
import matplotlib.pyplot as plt
```

```python
def _download_file(ftp: ftplib.FTP, filename: str, target_directory: str):
    """Download `filename` from the current FTP directory into `target_directory`.

    Returns the local file path, or None if the download failed.
    """
    print(f"Download file: {filename}")
    local_filepath = os.path.join(target_directory, filename)
    try:
        with open(local_filepath, 'wb') as file:
            ftp.retrbinary(f'RETR {filename}', file.write)
        return local_filepath
    except Exception as e:
        print(f"Error downloading {filename}: {e}")
        return None


def _get_last_version_filename(filenames):
    # L2 filenames end with a 2-digit product version, e.g. "..._PIC2_03.nc"
    versions = {int(f[-5:-3]): f for f in filenames}
    return versions[max(versions)]


def _select_filename(filenames, only_last):
    if not only_last:
        return filenames
    return [_get_last_version_filename(filenames)]


def ftp_download_files(ftp_path, level, variant, cycle_numbers, half_orbits, output_dir, only_last=True):
    """ Download half orbit files from AVISO's FTP server.

    Args:
        ftp_path: path of the FTP fileset
        level: L2 or L3
        variant: Basic, Expert, WindWave or Unsmoothed
        cycle_numbers: list of cycle numbers
        half_orbits: list of pass numbers
        output_dir: output directory
        only_last: if True (default), download only the last version of a file
            when several versions exist; download all versions otherwise.

    Returns:
        The list of local files.
    """
    # FTP server details
    ftpAVISO = 'ftp-access.aviso.altimetry.fr'

    # L3 filenames carry no version number, so version selection does not apply
    if level == "L3":
        only_last = False

    try:
        # Log into the FTP server; `username` and `password` are the notebook-level
        # variables defined in the "Authentication parameters" section
        with ftplib.FTP(ftpAVISO) as ftp:
            ftp.login(username, password)
            ftp.cwd(ftp_path)
            print(f"Connection Established {ftp.getwelcome()}")

            downloaded_files = []
            for cycle in cycle_numbers:
                cycle_str = f'{cycle:03d}'
                cycle_dir = f'cycle_{cycle_str}'
                print(ftp_path + cycle_dir)
                ftp.cwd(cycle_dir)

                for half_orbit in half_orbits:
                    half_orbit_str = f'{half_orbit:03d}'
                    pattern = f'SWOT_{level}_LR_SSH_{variant}_{cycle_str}_{half_orbit_str}'
                    # A pattern with no match may raise an FTP error or return an empty list
                    try:
                        filenames = ftp.nlst(f'{pattern}_*')
                    except ftplib.all_errors:
                        filenames = []
                    if not filenames:
                        print(f"No pass {half_orbit}")
                        continue
                    filenames = _select_filename(filenames, only_last)

                    local_files = [_download_file(ftp, f, output_dir) for f in filenames]
                    # Skip files whose download failed
                    downloaded_files += [f for f in local_files if f is not None]

                ftp.cwd('../')
            return downloaded_files

    except ftplib.error_perm as e:
        print(f"FTP error: {e}")
    except Exception as e:
        print(f"Error: {e}")


def _normalized_ds(ds, lon_min, lon_max):
    """Wrap longitudes into [lon_min, lon_max]. Modifies `ds` in place and returns it."""
    lon = ds.longitude.values
    lon[lon < lon_min] += 360
    lon[lon > lon_max] -= 360
    ds.longitude.values = lon
    return ds


def _subset_ds(file, group, variables, lon_range, lat_range):
    swot_ds = xr.open_dataset(file, group=group)
    swot_ds = swot_ds[variables]
    swot_ds.load()

    # Wrap longitudes into [-180, 180] so they can be compared with lon_range.
    # The subset is stored with these wrapped longitudes.
    swot_ds = _normalized_ds(swot_ds, -180, 180)

    mask = (
        (swot_ds.longitude <= lon_range[1])
        & (swot_ds.longitude >= lon_range[0])
        & (swot_ds.latitude <= lat_range[1])
        & (swot_ds.latitude >= lat_range[0])
    ).compute()
    # Keep only the lines and pixels that fall inside the area
    swot_ds_area = swot_ds.where(mask, drop=True)

    # Compress every variable when the subset is written
    for var in list(swot_ds_area.keys()):
        swot_ds_area[var].encoding = {'zlib': True, 'complevel': 5}
    return swot_ds_area


def _to_netcdf(ds_root, ds_left, ds_right, output_file):
    """ Writes the root dataset and the left/right groups to a netCDF file """
    ds_root.to_netcdf(output_file)
    ds_right.to_netcdf(output_file, group='right', mode='a')
    ds_left.to_netcdf(output_file, group='left', mode='a')


def _subset_grouped_ds(file, variables, lon_range, lat_range, output_dir):
    print(f"Subset dataset: {file}")
    ds_root = xr.open_dataset(file)
    swot_ds_right = _subset_ds(file, 'right', variables, lon_range, lat_range)
    swot_ds_left = _subset_ds(file, 'left', variables, lon_range, lat_range)

    if swot_ds_right.sizes['num_lines'] == 0 and swot_ds_left.sizes['num_lines'] == 0:
        print(f'Dataset {file} not matching geographical area.')
        return None

    filename = f"subset_{os.path.basename(file)}"
    print(f"Store subset: {filename}")
    filepath = os.path.join(output_dir, filename)
    _to_netcdf(ds_root, swot_ds_left, swot_ds_right, filepath)
    return filepath


def subset_files(filenames, variables, lon_range, lat_range, output_dir):
    """ Subset datasets with a geographical area.

    Args:
        filenames: the filenames of the datasets to subset
        variables: variables to select
        lon_range: the longitude range
        lat_range: the latitude range
        output_dir: output directory

    Returns:
        The list of subset files.
    """
    subsets = [_subset_grouped_ds(f, variables, lon_range, lat_range, output_dir) for f in filenames]
    # Drop the files that do not intersect the area
    return [subset_file for subset_file in subsets if subset_file is not None]


def _interpolate_coords(ds):
    """Fill NaN longitudes/latitudes in place by linear interpolation over the flattened swath."""
    lon = ds.longitude
    lat = ds.latitude
    shape = lon.shape

    # Flatten the 2D (num_lines, num_pixels) arrays into one 1D dimension
    dss = xr.Dataset({
        'longitude': xr.DataArray(data=np.array(lon).ravel(), dims=['points']),
        'latitude': xr.DataArray(data=np.array(lat).ravel(), dims=['points']),
    })
    # Linear interpolation; "extrapolate" also fills NaNs at both ends (uses scipy)
    dss_interp = dss.interpolate_na(dim="points", method="linear", fill_value="extrapolate")

    ds['longitude'] = (('num_lines', 'num_pixels'), dss_interp.longitude.values.reshape(shape))
    ds['latitude'] = (('num_lines', 'num_pixels'), dss_interp.latitude.values.reshape(shape))


def normalize_coordinates(ds):
    """ Normalizes the coordinates of the dataset: interpolates NaN values in lon/lat,
    and assigns lon/lat as coordinates.

    Args:
        ds: the dataset

    Returns:
        xr.Dataset: the normalized dataset
    """
    _interpolate_coords(ds)
    return ds.assign_coords(
        {"longitude": ds.longitude, "latitude": ds.latitude}
    )
```
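`_get_last_version_filename` treats the two characters just before `.nc` as the product version and keeps the highest one. The sketch below restates that rule with a regular expression so that a filename without a version suffix fails loudly instead of being sliced silently. `latest_version` is a hypothetical helper, and the two-version listing is hypothetical, modelled on the file naming used in this notebook.

```python
import re


def latest_version(filenames):
    """Return the filename with the highest trailing 2-digit version (..._NN.nc)."""
    def version(name):
        # Same characters as f[-5:-3] in the notebook, but checked explicitly
        match = re.search(r"_(\d{2})\.nc$", name)
        if match is None:
            raise ValueError(f"No version suffix in {name}")
        return int(match.group(1))

    return max(filenames, key=version)


# Hypothetical listing: the same pass delivered as versions 01 and 03
candidates = [
    "SWOT_L2_LR_SSH_Unsmoothed_023_274_20241101T001035_20241101T010202_PIC2_01.nc",
    "SWOT_L2_LR_SSH_Unsmoothed_023_274_20241101T001035_20241101T010202_PIC2_03.nc",
]
print(latest_version(candidates))
```

Only the `_03` file would be kept, which corresponds to the `only_last=True` behaviour of `ftp_download_files`.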

Parameters

Define the output folder used to save results (it is created if it does not exist):

```python
output_dir = "downloads"
os.makedirs(output_dir, exist_ok=True)
```

Authentication parameters

Enter your AVISO+ credentials

```python
username = input("Enter username:")
```

Enter username: aviso-swot@altimetry.fr

```python
password = getpass(f"Enter password for {username}:")
```

Enter password for aviso-swot@altimetry.fr: ········

Data parameters

Define the variables you want:

```python
variables = ['latitude', 'longitude', 'time', 'sig0_karin_2', 'ancillary_surface_classification_flag', 'ssh_karin_2', 'ssh_karin_2_qual']
```
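The subsetting step selects `swot_ds[variables]`, which raises a `KeyError` if any requested name is absent from a group. One way to check the list beforehand is a small helper such as the hypothetical `missing_variables` below. It is shown on a synthetic two-variable dataset standing in for a swath group, not on real SWOT data.

```python
import numpy as np
import xarray as xr


def missing_variables(ds, variables):
    """Return the requested names that are neither data variables nor coordinates of ds."""
    return [name for name in variables if name not in ds.variables]


# Synthetic stand-in for one swath group (2 lines x 3 pixels)
ds = xr.Dataset(
    {
        "ssh_karin_2": (("num_lines", "num_pixels"), np.zeros((2, 3))),
        "latitude": (("num_lines", "num_pixels"), np.zeros((2, 3))),
    }
)
print(missing_variables(ds, ["latitude", "ssh_karin_2", "sig0_karin_2"]))
```

Once files are downloaded, the same check could be run as `missing_variables(xr.open_dataset(downloaded_files[0], group="left"), variables)`.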

Define a geographical area

```python
# California
lat_range = 35, 42
lon_range = -127, -121
# Order expected by cartopy's set_extent: [lon_min, lon_max, lat_min, lat_max]
localbox = [lon_range[0], lon_range[1], lat_range[0], lat_range[1]]
localbox
```

[-127, -121, 35, 42]
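`lon_range` is expressed in [-180, 180], while the subsetting code wraps the file longitudes with `_normalized_ds(..., -180, 180)` before comparing them. This suggests the files store longitudes in [0, 360). Under that assumption, the following sketch applies the same wrapping rule and the resulting longitude mask to a few sample values (not taken from a real file):

```python
import numpy as np

lon_range = (-127, -121)  # California box, as defined above

# Sample longitudes in the assumed [0, 360) convention
lon = np.array([230.0, 235.0, 238.5, 245.0, 10.0])

# Same rule as _normalized_ds(ds, -180, 180)
wrapped = lon.copy()
wrapped[wrapped < -180] += 360
wrapped[wrapped > 180] -= 360

# Longitude part of the mask built in _subset_ds
inside = (wrapped >= lon_range[0]) & (wrapped <= lon_range[1])
print(wrapped)
print(inside)
```

Only 235 and 238.5 (that is, -125 and -121.5) fall inside the box. Without the wrapping, no value in [0, 360) could ever satisfy a negative `lon_range`.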

Define the FTP filepath

```python
ftp_path = '/swot_products/l2_karin/l2_lr_ssh/PIC2/Unsmoothed/'
# Used to build the filename pattern (a shell-style wildcard, not a regex) listed on the server
variant = "Unsmoothed"
level = "L2"
```
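`ftp_download_files` builds a wildcard pattern from `level`, `variant`, the zero-padded cycle number and the pass number, then asks the server for matching names with `ftp.nlst`. How the server expands `*` depends on the server. The sketch below assumes shell-style matching and emulates it locally with `fnmatch`. The listing is hypothetical, built from two filenames printed by the download cell further down.

```python
from fnmatch import fnmatch

level, variant = "L2", "Unsmoothed"
cycle, half_orbit = 23, 11

# Same construction as in ftp_download_files: 3-digit zero-padded cycle and pass
pattern = f"SWOT_{level}_LR_SSH_{variant}_{cycle:03d}_{half_orbit:03d}_*"

# Hypothetical directory listing for cycle_023
listing = [
    "SWOT_L2_LR_SSH_Unsmoothed_023_011_20241022T144002_20241022T153050_PIC2_01.nc",
    "SWOT_L2_LR_SSH_Unsmoothed_023_024_20241023T014851_20241023T024018_PIC2_01.nc",
]
matches = [name for name in listing if fnmatch(name, pattern)]
print(pattern)
print(matches)
```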

Define data parameters

Note

Passes matching a geographical area and period can be found using this tutorial

```python
# Define the cycle and half-orbit (pass) numbers to download
cycle_numbers = [23]
pass_numbers = [11, 24, 39, 52, 67, 274, 289, 302, 317]
```

Download files through FTP

```python
downloaded_files = ftp_download_files(ftp_path, level, variant, cycle_numbers, pass_numbers, output_dir, only_last=True)
```

Connection Established 220 192.168.10.119 FTP server ready

/swot_products/l2_karin/l2_lr_ssh/PIC2/Unsmoothed/cycle_023

Download file: SWOT_L2_LR_SSH_Unsmoothed_023_011_20241022T144002_20241022T153050_PIC2_01.nc

Download file: SWOT_L2_LR_SSH_Unsmoothed_023_024_20241023T014851_20241023T024018_PIC2_01.nc

Download file: SWOT_L2_LR_SSH_Unsmoothed_023_039_20241023T144034_20241023T153122_PIC2_01.nc

Download file: SWOT_L2_LR_SSH_Unsmoothed_023_052_20241024T014922_20241024T024049_PIC2_01.nc

Download file: SWOT_L2_LR_SSH_Unsmoothed_023_067_20241024T144105_20241024T153152_PIC2_01.nc

Download file: SWOT_L2_LR_SSH_Unsmoothed_023_274_20241101T001035_20241101T010202_PIC2_03.nc

No pass 289

Download file: SWOT_L2_LR_SSH_Unsmoothed_023_302_20241102T001106_20241102T010233_PIC2_01.nc

Download file: SWOT_L2_LR_SSH_Unsmoothed_023_317_20241102T130249_20241102T135416_PIC2_01.nc

```python
downloaded_files
```

['downloads/SWOT_L2_LR_SSH_Unsmoothed_023_011_20241022T144002_20241022T153050_PIC2_01.nc',

'downloads/SWOT_L2_LR_SSH_Unsmoothed_023_024_20241023T014851_20241023T024018_PIC2_01.nc',

'downloads/SWOT_L2_LR_SSH_Unsmoothed_023_039_20241023T144034_20241023T153122_PIC2_01.nc',

'downloads/SWOT_L2_LR_SSH_Unsmoothed_023_052_20241024T014922_20241024T024049_PIC2_01.nc',

'downloads/SWOT_L2_LR_SSH_Unsmoothed_023_067_20241024T144105_20241024T153152_PIC2_01.nc',

'downloads/SWOT_L2_LR_SSH_Unsmoothed_023_274_20241101T001035_20241101T010202_PIC2_03.nc',

'downloads/SWOT_L2_LR_SSH_Unsmoothed_023_302_20241102T001106_20241102T010233_PIC2_01.nc',

'downloads/SWOT_L2_LR_SSH_Unsmoothed_023_317_20241102T130249_20241102T135416_PIC2_01.nc']

Subset data in the required geographical area

```python
subset_filenames = subset_files(downloaded_files, variables, lon_range, lat_range, output_dir)
```

Subset dataset: downloads/SWOT_L2_LR_SSH_Unsmoothed_023_011_20241022T144002_20241022T153050_PIC2_01.nc

Store subset: subset_SWOT_L2_LR_SSH_Unsmoothed_023_011_20241022T144002_20241022T153050_PIC2_01.nc

Subset dataset: downloads/SWOT_L2_LR_SSH_Unsmoothed_023_024_20241023T014851_20241023T024018_PIC2_01.nc

Store subset: subset_SWOT_L2_LR_SSH_Unsmoothed_023_024_20241023T014851_20241023T024018_PIC2_01.nc

Subset dataset: downloads/SWOT_L2_LR_SSH_Unsmoothed_023_039_20241023T144034_20241023T153122_PIC2_01.nc

Store subset: subset_SWOT_L2_LR_SSH_Unsmoothed_023_039_20241023T144034_20241023T153122_PIC2_01.nc

Subset dataset: downloads/SWOT_L2_LR_SSH_Unsmoothed_023_052_20241024T014922_20241024T024049_PIC2_01.nc

Store subset: subset_SWOT_L2_LR_SSH_Unsmoothed_023_052_20241024T014922_20241024T024049_PIC2_01.nc

Subset dataset: downloads/SWOT_L2_LR_SSH_Unsmoothed_023_067_20241024T144105_20241024T153152_PIC2_01.nc

Store subset: subset_SWOT_L2_LR_SSH_Unsmoothed_023_067_20241024T144105_20241024T153152_PIC2_01.nc

Subset dataset: downloads/SWOT_L2_LR_SSH_Unsmoothed_023_274_20241101T001035_20241101T010202_PIC2_03.nc

Store subset: subset_SWOT_L2_LR_SSH_Unsmoothed_023_274_20241101T001035_20241101T010202_PIC2_03.nc

Subset dataset: downloads/SWOT_L2_LR_SSH_Unsmoothed_023_302_20241102T001106_20241102T010233_PIC2_01.nc

Store subset: subset_SWOT_L2_LR_SSH_Unsmoothed_023_302_20241102T001106_20241102T010233_PIC2_01.nc

Subset dataset: downloads/SWOT_L2_LR_SSH_Unsmoothed_023_317_20241102T130249_20241102T135416_PIC2_01.nc

Store subset: subset_SWOT_L2_LR_SSH_Unsmoothed_023_317_20241102T130249_20241102T135416_PIC2_01.nc

```python
subset_filenames
```

['downloads/subset_SWOT_L2_LR_SSH_Unsmoothed_023_011_20241022T144002_20241022T153050_PIC2_01.nc',

'downloads/subset_SWOT_L2_LR_SSH_Unsmoothed_023_024_20241023T014851_20241023T024018_PIC2_01.nc',

'downloads/subset_SWOT_L2_LR_SSH_Unsmoothed_023_039_20241023T144034_20241023T153122_PIC2_01.nc',

'downloads/subset_SWOT_L2_LR_SSH_Unsmoothed_023_052_20241024T014922_20241024T024049_PIC2_01.nc',

'downloads/subset_SWOT_L2_LR_SSH_Unsmoothed_023_067_20241024T144105_20241024T153152_PIC2_01.nc',

'downloads/subset_SWOT_L2_LR_SSH_Unsmoothed_023_274_20241101T001035_20241101T010202_PIC2_03.nc',

'downloads/subset_SWOT_L2_LR_SSH_Unsmoothed_023_302_20241102T001106_20241102T010233_PIC2_01.nc',

'downloads/subset_SWOT_L2_LR_SSH_Unsmoothed_023_317_20241102T130249_20241102T135416_PIC2_01.nc']
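Each subset file is produced by the mask-and-drop step in `_subset_ds`. The sketch below applies the same step to a synthetic 3-line × 4-pixel swath (not real SWOT data) with longitudes already in [-180, 180]. With `drop=True`, `Dataset.where` removes every line and every pixel column where the mask is False everywhere. Kept lines are therefore not trimmed pixel by pixel: masked pixels inside them become NaN.

```python
import numpy as np
import xarray as xr

# Synthetic swath: same longitudes on each line, one latitude per line
lon = np.tile([-128.0, -126.0, -124.0, -120.0], (3, 1))
lat = np.repeat([[34.0], [36.0], [43.0]], 4, axis=1)
ds = xr.Dataset(
    {
        "longitude": (("num_lines", "num_pixels"), lon),
        "latitude": (("num_lines", "num_pixels"), lat),
        "ssh_karin_2": (("num_lines", "num_pixels"), np.arange(12.0).reshape(3, 4)),
    }
)

lon_range, lat_range = (-127, -121), (35, 42)
mask = (
    (ds.longitude >= lon_range[0]) & (ds.longitude <= lon_range[1])
    & (ds.latitude >= lat_range[0]) & (ds.latitude <= lat_range[1])
)
# Drop lines and pixels that are outside the box everywhere
subset = ds.where(mask, drop=True)
print(dict(subset.sizes))
print(subset.ssh_karin_2.values)
```

Only the middle line (latitude 36) and the two pixels at -126 and -124 survive. This is also why `_subset_grouped_ds` can test `sizes['num_lines'] == 0` to detect a pass outside the area.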

Visualise data on a pass

Open a pass dataset

```python
subset_file = "downloads/subset_SWOT_L2_LR_SSH_Unsmoothed_023_317_20241102T130249_20241102T135416_PIC2_01.nc"
ds_left = xr.open_dataset(subset_file, group="left")
ds_right = xr.open_dataset(subset_file, group="right")
```

Interpolate coordinates to fill NaN values in latitude and longitude, and assign them as coordinates.

```python
ds_left = normalize_coordinates(ds_left)
ds_right = normalize_coordinates(ds_right)
```

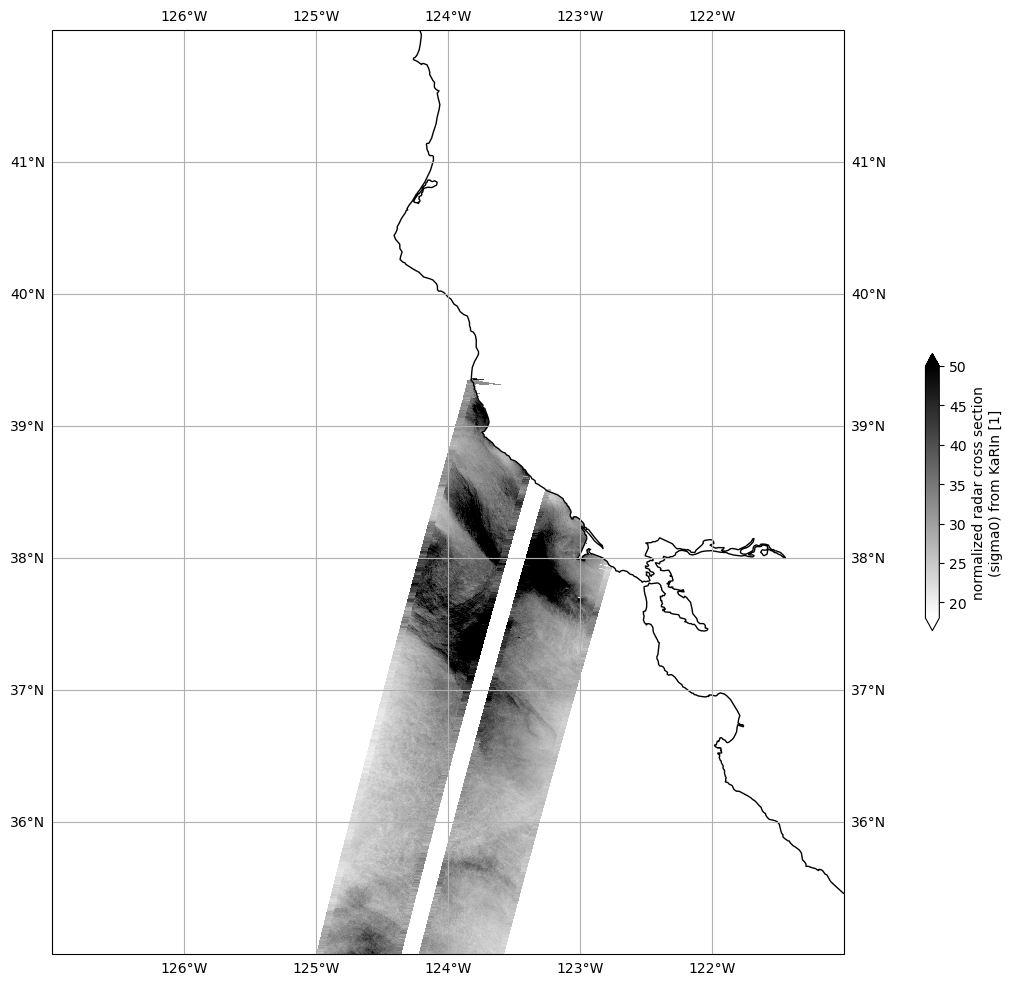
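`_interpolate_coords` flattens the 2D coordinate arrays, fills NaNs by linear interpolation along the flattened index, and reshapes the result. The sketch below shows the same idea with `np.interp` in place of xarray's `interpolate_na` (a deliberate swap). One difference: `np.interp` repeats the first or last valid value at the edges instead of extrapolating linearly as `fill_value="extrapolate"` does. `fill_nan_linear` is a hypothetical helper, and the latitude block is synthetic.

```python
import numpy as np


def fill_nan_linear(values):
    """Fill NaNs by linear interpolation over the flattened (row-major) index.

    Edge NaNs get the nearest valid value (np.interp does not extrapolate).
    """
    flat = np.asarray(values, dtype=float).ravel()
    idx = np.arange(flat.size)
    valid = ~np.isnan(flat)
    filled = np.interp(idx, idx[valid], flat[valid])
    return filled.reshape(np.shape(values))


# Synthetic 2 x 3 latitude block with one missing pixel
lat = np.array([[35.0, 35.1, 35.2],
                [35.3, np.nan, 35.5]])
print(fill_nan_linear(lat))
```

In both versions, flattening makes the last pixel of one line a neighbour of the first pixel of the next line. This is a simplification that is only reasonable for short gaps inside a line.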

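Plot Sigma0 (sig0_karin_2)

Mask invalid data

```python
for dss in ds_left, ds_right:
    # Keep only pixels whose surface classification flag is 0
    dss["sig0_karin_2"] = dss.sig0_karin_2.where(dss.ancillary_surface_classification_flag == 0)
    # Discard sigma0 values of 1e6 and above, treated as invalid
    dss["sig0_karin_2"] = dss.sig0_karin_2.where(dss.sig0_karin_2 < 1e6)
    # Optional conversion to decibels:
    # dss["sig0_karin_2_log"] = 10 * np.log10(dss["sig0_karin_2"])
```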
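The masking above chains two `where` calls, and the commented-out line converts sigma0 to decibels. Here is a self-contained sketch of both steps on four synthetic pixels (not real SWOT data): one pixel has a non-zero surface flag and one has a value above the 1e6 threshold. Both become NaN, and `np.log10` leaves NaN unchanged, so the dB conversion can safely run after masking.

```python
import numpy as np
import xarray as xr

# Synthetic sigma0 values and surface classification flags
sig0 = xr.DataArray([10.0, 100.0, 2e6, 50.0], dims="num_pixels")
flag = xr.DataArray([0, 0, 0, 1], dims="num_pixels")

# Same two masks as in the notebook cell (both conditions use the unmasked values)
sig0 = sig0.where(flag == 0).where(sig0 < 1e6)

# Optional conversion to decibels
sig0_db = 10 * np.log10(sig0)
print(sig0_db.values)
```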

Plot data

```python
plot_kwargs = dict(
    x="longitude",
    y="latitude",
    cmap="gray_r",
    vmin=5,
    vmax=40,
)

fig, ax = plt.subplots(figsize=(21, 12), subplot_kw=dict(projection=ccrs.PlateCarree()))

ds_left.sig0_karin_2.plot.pcolormesh(ax=ax, cbar_kwargs={"shrink": 0.3}, **plot_kwargs)
ds_right.sig0_karin_2.plot.pcolormesh(ax=ax, add_colorbar=False, **plot_kwargs)

ax.gridlines(draw_labels=True)
ax.coastlines()
ax.set_extent(localbox, crs=ccrs.PlateCarree())
```

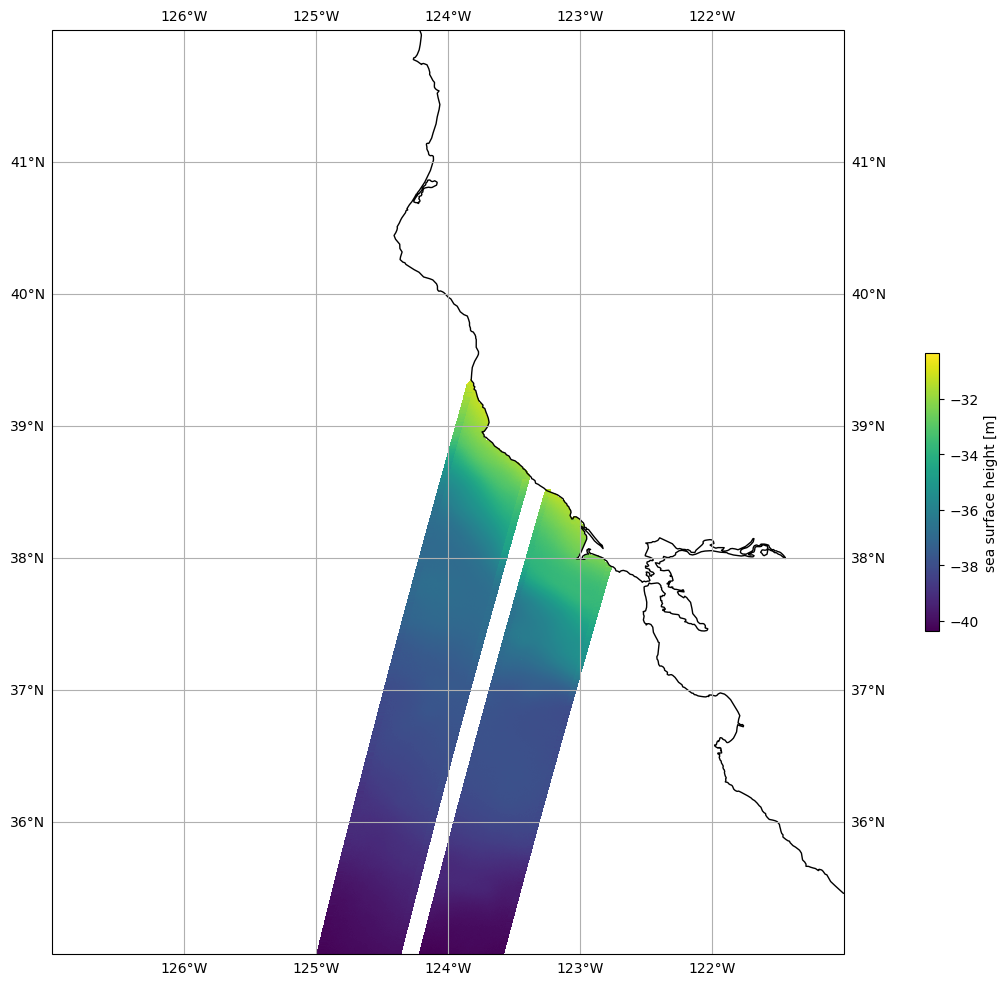

Plot SSH (ssh_karin_2)

Mask invalid data

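```python
for dss in ds_left, ds_right:
    # Keep only pixels whose surface classification flag is 0
    dss["ssh_karin_2"] = dss.ssh_karin_2.where(dss.ancillary_surface_classification_flag == 0)
    # Keep only pixels whose SSH quality flag is 0
    dss["ssh_karin_2"] = dss.ssh_karin_2.where(dss.ssh_karin_2_qual == 0)
```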

Plot data

```python
plot_kwargs = dict(
    x="longitude",
    y="latitude"
)

fig, ax = plt.subplots(figsize=(21, 12), subplot_kw=dict(projection=ccrs.PlateCarree()))

ds_left.ssh_karin_2.plot.pcolormesh(ax=ax, cbar_kwargs={"shrink": 0.3}, **plot_kwargs)
ds_right.ssh_karin_2.plot.pcolormesh(ax=ax, add_colorbar=False, **plot_kwargs)

ax.gridlines(draw_labels=True)
ax.coastlines()
ax.set_extent(localbox, crs=ccrs.PlateCarree())
```
